The audio sounds like it’s being played underwater. Still, it’s a first step toward creating more expressive devices to assist people who can’t speak.

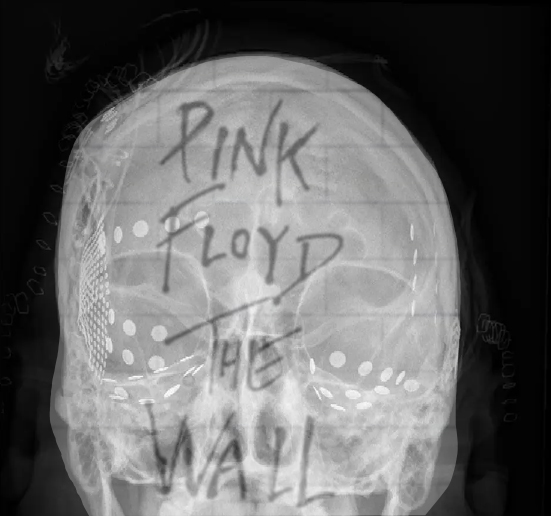

Scientists have trained a computer to analyze the brain activity of someone listening to music and, based only on those neuronal patterns, recreate the song.

The research, published on Tuesday, produced a recognizable, if muffled, version of Pink Floyd’s 1979 song, “Another Brick in the Wall (Part 1).”

Before this, researchers had figured out how to use brain activity to reconstruct music with features similar to those of the song someone was listening to. Now, “you can actually listen to the brain and restore the music that person heard,” said Gerwin Schalk, a neuroscientist who directs a research lab in Shanghai and collected data for this study.

The researchers also found a spot in the brain’s temporal lobe that reacted when volunteers heard the 16th notes of the song’s guitar groove. They proposed that this particular area might be involved in our perception of rhythm.

The findings offer a first step toward creating more expressive devices to assist people who can’t speak. Over the past few years, scientists have made major breakthroughs in extracting words from the electrical signals produced by the brains of people with muscle paralysis when they attempt to speak.

But a significant amount of the information conveyed through speech comes from what linguists call “prosodic” elements, like tone — “the things that make us a lively speaker and not a robot,” Dr. Schalk said.